AI for Cybersecurity Is Advancing — But Trust Is Still the Missing Link

17 July 2025 • 2 min read

The Rise of AI in Security: Smart, Fast… Still Distrusted

AI is rapidly becoming a core force multiplier in cybersecurity, from threat detection and response to user behavior modeling and real-time forensics.

But while the technology is ready, humans aren’t.

A recent report featured in Forbes CIO reveals a key gap:

Security teams don’t fully trust AI — especially when it acts autonomously.

And that hesitation is slowing adoption, particularly in environments where provable accuracy and policy transparency are non-negotiable.

Why AI Makes Sense for Security

AI is already transforming cyber defense across multiple layers:

- Detection & Prevention: AI models catch patterns and anomalies humans miss

- Automation: Reduces alert fatigue by triaging real threats within seconds

- Speed: Responds in real time, outpacing manual intervention

- Threat Intelligence Correlation: Links logs, packets, and behaviors into clear signals

So what’s the issue?

The Trust Deficit

The problem isn’t capability — it’s clarity.

Security professionals — especially CISOs — remain hesitant because of AI’s “black box” behavior.

They’re asking:

- Why did the AI block this action?

- Can I audit that decision?

- Is this aligned with our compliance policies?

Without transparency and explainability, even effective AI gets sidelined by security leadership.

“If your AI can’t show its work, it won’t earn a seat at the cyber war table.”

Cyber Protocol’s Position

We’ve integrated AI-driven tools into our Audit Engine, but with strict guardrails.

- Every automated action must be auditable and reversible

- AI decisions must come with clear justifications

- Our models are tuned for verifiable data, not guesses or hallucinations

Our analysts remain in control. The AI assists — it does not override.
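To make these guardrails concrete, here is a minimal Python sketch of how they might look in code. It is an illustration only, not the Audit Engine itself: `ProposedAction`, `AuditLog`, `approve_and_apply`, and `revert` are hypothetical names, and the in-memory blocklist stands in for a real enforcement point. Each AI-proposed action carries a justification, is applied only when an analyst approves it, and keeps an undo step so it can be reversed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Optional


@dataclass
class ProposedAction:
    """An action suggested by a model (hypothetical structure)."""
    description: str
    justification: str             # why the model proposed the action
    apply: Callable[[], None]      # performs the action
    undo: Callable[[], None]       # reverses the action
    approved_by: Optional[str] = None
    status: str = "proposed"       # proposed -> applied -> reverted


@dataclass
class AuditLog:
    """Append-only record of who did what, and why."""
    entries: List[str] = field(default_factory=list)

    def record(self, event: str, action: ProposedAction, actor: str) -> None:
        ts = datetime.now(timezone.utc).isoformat()
        self.entries.append(
            f"{ts} {actor} {event}: {action.description} "
            f"| reason: {action.justification}"
        )


def approve_and_apply(action: ProposedAction, analyst: str, log: AuditLog) -> None:
    # The analyst stays in control: nothing runs without an approver
    # and a stated justification.
    if not action.justification.strip():
        raise ValueError("refusing to apply an action without a justification")
    action.approved_by = analyst
    action.apply()
    action.status = "applied"
    log.record("applied", action, analyst)


def revert(action: ProposedAction, analyst: str, log: AuditLog) -> None:
    if action.status != "applied":
        raise ValueError("only applied actions can be reverted")
    action.undo()
    action.status = "reverted"
    log.record("reverted", action, analyst)


# Usage: a model proposes blocking an IP; an analyst approves, then reverts.
blocked = set()  # stand-in for a real blocklist
action = ProposedAction(
    description="block 203.0.113.7",
    justification="50 failed logins in 60 seconds",
    apply=lambda: blocked.add("203.0.113.7"),
    undo=lambda: blocked.discard("203.0.113.7"),
)
log = AuditLog()
approve_and_apply(action, "analyst-1", log)
revert(action, "analyst-1", log)
```

The design choice worth noting is that the justification check and the audit write live in the same function that applies the action, so an unexplained or unlogged change cannot happen through this path.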

What to Look for in Trustworthy AI

When evaluating cybersecurity platforms with AI features, ask:

- Does it explain why something was flagged or blocked?

- Can I trace decisions across logs and sessions?

- Is the model compatible with a zero-trust security posture?

If the answer is no, it’s not security — it’s risk.
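One way to test the first two questions during an evaluation is to export a platform's decision records and check them yourself. The sketch below assumes a hypothetical export format: a list of dictionaries with `session_id`, `timestamp` (ISO 8601, UTC), `verdict`, and `reason` fields. Real products will use different schemas, so treat the field names as placeholders.

```python
from typing import Dict, List

Decision = Dict[str, str]  # hypothetical record shape, see field names below


def trace_session(decisions: List[Decision], session_id: str) -> List[Decision]:
    """Return every decision for one session, oldest first."""
    matching = [d for d in decisions if d["session_id"] == session_id]
    # ISO 8601 timestamps in the same format and timezone sort chronologically as strings.
    return sorted(matching, key=lambda d: d["timestamp"])


def unexplained(decisions: List[Decision]) -> List[Decision]:
    """Return decisions that carry no stated reason."""
    return [d for d in decisions if not d.get("reason", "").strip()]


# Made-up sample records for illustration.
decisions = [
    {"session_id": "s-2", "timestamp": "2025-07-17T10:05:00Z",
     "verdict": "blocked", "reason": ""},
    {"session_id": "s-1", "timestamp": "2025-07-17T10:02:00Z",
     "verdict": "blocked", "reason": "credential stuffing pattern"},
    {"session_id": "s-1", "timestamp": "2025-07-17T10:01:00Z",
     "verdict": "flagged", "reason": "login from new country"},
]

for d in trace_session(decisions, "s-1"):
    print(d["timestamp"], d["verdict"], d["reason"])

print(len(unexplained(decisions)), "decision(s) without a reason")
```

If a platform cannot produce records that pass checks like these, the answers to the questions above are effectively no.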

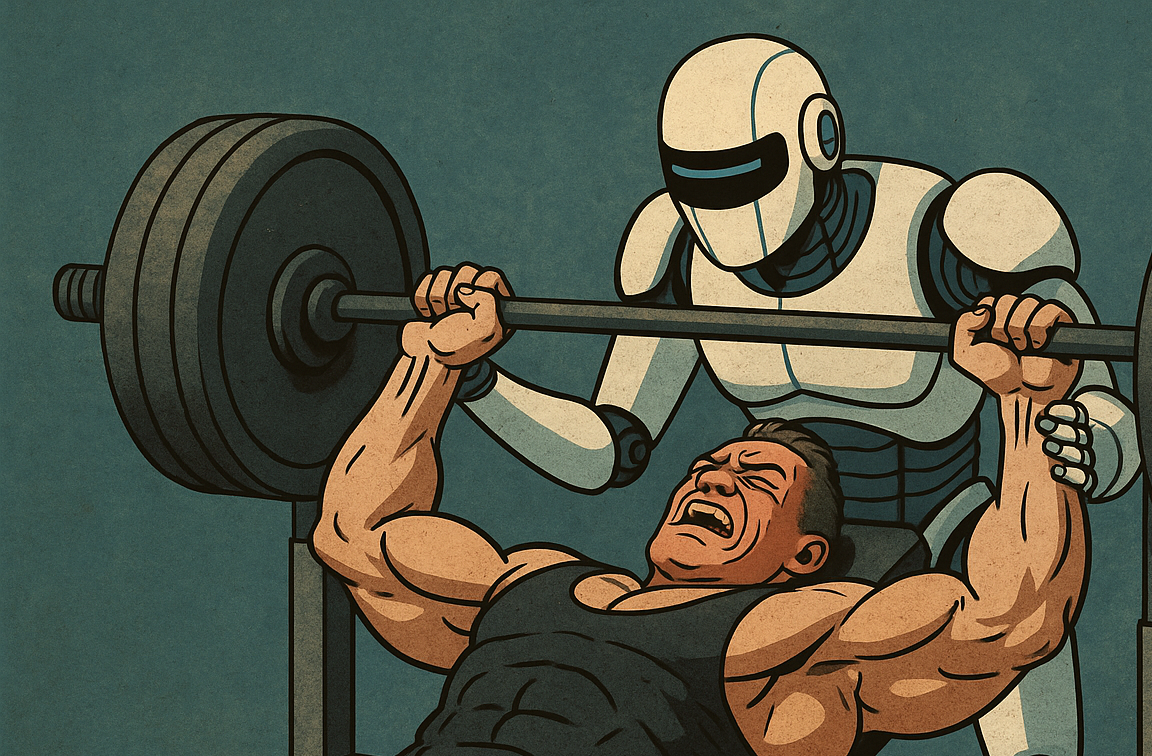

The Future: Human + AI, Not Human vs. AI

We believe the strongest cybersecurity posture in 2026 will be hybrid:

Human judgment amplified by machine precision.

That means explainability, transparency, and the ability to override.

Want to see how auditable AI works in practice?

Visit our platform and request a Cyber Protocol AI-Augmented Audit.

And please, don’t just automate. Verify.

— The Cyber Protocol Team